Artificial intelligence, or AI, is a technology that has been rapidly advancing in recent years and is becoming increasingly integrated into our daily lives. From personal assistants like Siri and Alexa, to self-driving cars and intelligent home appliances, AI is changing the way we live and work.

It's important to note, however, that the AI we use today is vastly different from what it was in the past. The history of AI dates back to the 1950s, and the field has undergone many changes and overcome many obstacles to reach where it is today. Today, we will be discussing the rise of this technology and how it may change our lives in the future.

So please take a seat, as this will be an in-depth discussion on the topic of AI. We will be delving into the history, advancements, and future potential of this technology. We hope you find it informative and enjoyable.

🧠 The Late 50's: Rosenblatt's Concept of the Perceptron

Frank Rosenblatt, an American psychologist and computer scientist, was one of the pioneers in the field of artificial intelligence and neural networks. In 1958, he published a paper titled "The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain", in which he proposed a model of an artificial neuron that learns from examples.

In this paper, he described how a computer could be programmed to learn from experience and make decisions based on that learning, using a model of the human brain as inspiration. He proposed the use of artificial neurons, which he called "perceptrons," as the basic building blocks for this type of computer system.

This work laid the foundation for the development of deep learning algorithms and paved the way for the current generation of neural networks used in artificial intelligence and machine learning.

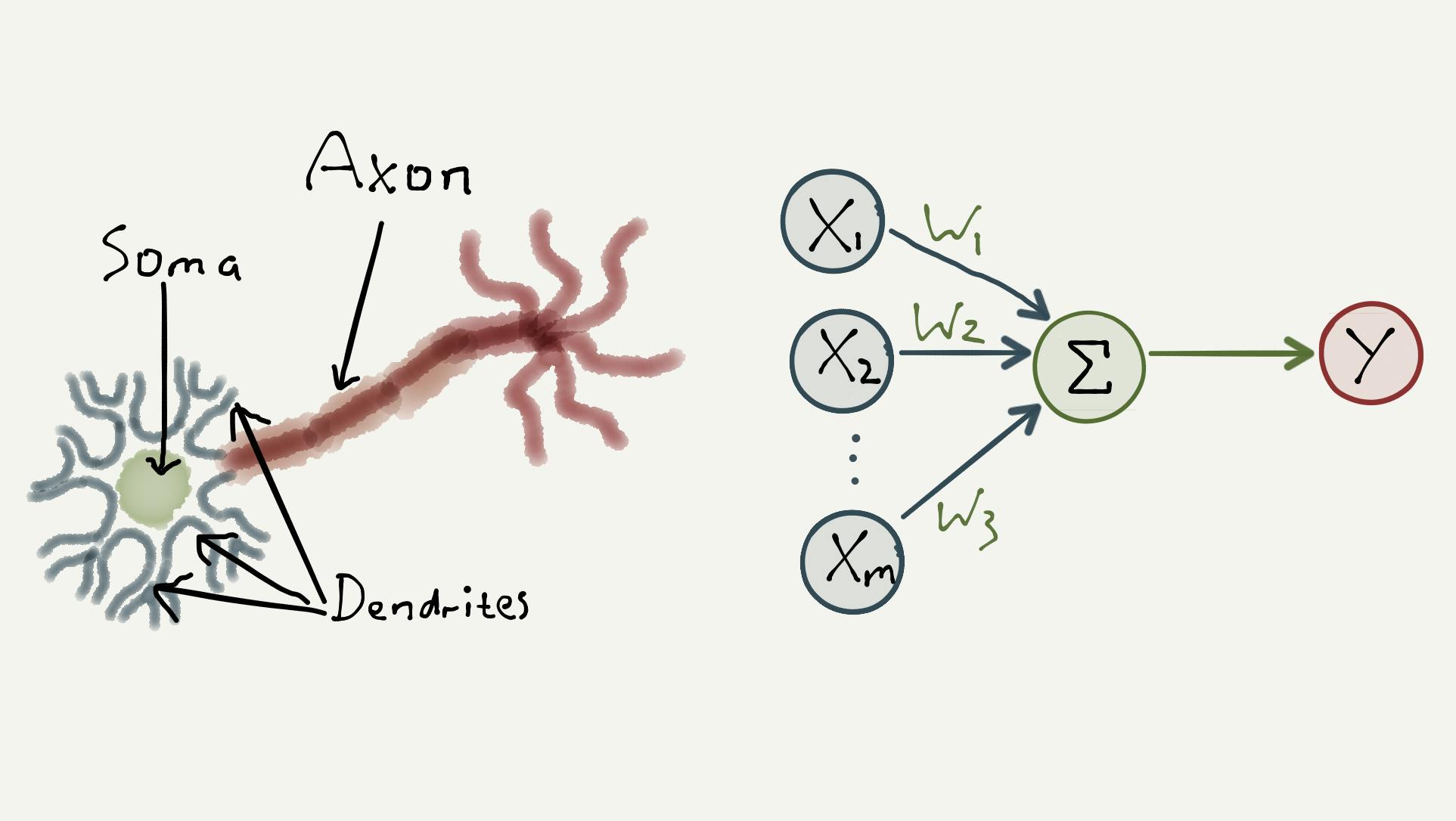

🖼 A Human Neuron compared to Deep Learning Network's Perceptron

Frank Rosenblatt's perceptron, one of the first artificial neural networks ever developed, was a unique piece of work for its time. But it had several limitations and disadvantages:

The major disadvantage of the perceptron was its inability to solve problems that are not linearly separable. A single perceptron can only classify patterns that can be separated by a straight line (or, in higher dimensions, a flat plane). The classic counterexample is the XOR function: no single straight line separates its two classes, so a perceptron cannot learn it. This limited the perceptron's ability to solve more complex problems.
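To make the linear separability limit concrete, here is a minimal sketch of a single perceptron trained with the classic error-driven update rule. The function names, the learning rate and the number of epochs are illustrative assumptions, not taken from the original paper.

```python
def predict(w, b, x1, x2):
    # Hard-threshold activation: fire (1) only if the weighted sum is positive.
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    # Start from zero weights and nudge them whenever a prediction is wrong.
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            err = target - predict(w, b, x1, x2)  # -1, 0 or +1
            w[0] += lr * err * x1
            w[1] += lr * err * x2
            b += lr * err
    return w, b


def accuracy(samples, w, b):
    correct = sum(predict(w, b, x1, x2) == t for (x1, x2), t in samples)
    return correct / len(samples)


# AND is linearly separable, XOR is not.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_acc = accuracy(AND, *train_perceptron(AND))
xor_acc = accuracy(XOR, *train_perceptron(XOR))
print("AND accuracy:", and_acc)
print("XOR accuracy:", xor_acc)
```

On AND the perceptron classifies all four points correctly, while on XOR it always gets at least one point wrong, however long it trains.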

Another disadvantage of the perceptron was that its learning rule only worked for a single layer of adjustable weights. The perceptron corrected its weights using the error at its output, but at the time there was no practical way to pass that error back through hidden layers. This made it difficult to train the multi-layer networks that more complex problems require.

In addition, the perceptron was limited in its ability to handle noise or errors in the input data. Because its output is a hard threshold, small variations in inputs close to the decision boundary could flip the prediction, making it prone to errors and reducing its overall accuracy.

Finally, on the hardware of the time, the perceptron algorithm was computationally demanding and needed a lot of data to train effectively. This made it difficult to use the perceptron for real-time applications or problems with limited data.

The algorithm's shortcomings contributed to the 'First AI Winter,' a period of decreased funding and interest in the field of artificial intelligence. However, the breakthroughs made by Rosenblatt in the use of artificial neurons paved the way for the development of multi-layer perceptrons and convolutional neural networks, which have since become important tools in the field of artificial intelligence and machine learning.

❄ The 70's: The First AI Winter

The first "AI Winter" is usually dated from the mid-1970s to around 1980. This period was characterized by a decline in funding and support for AI research, as well as a decrease in the number of AI-related publications and conferences.

The AI Winter was caused by several factors, including overhyped expectations for the capabilities of AI, a lack of progress in achieving certain AI milestones, and a lack of practical applications for AI technology. Additionally, the high costs of AI research and development, as well as the lack of a clear path to commercialization, also contributed to the decline in funding and support for AI during this period.

During the AI Winter, many AI researchers and companies left the field, and funding for AI research decreased significantly. However, by the mid-1980s, advances in computer technology, such as the development of faster and more powerful computers, as well as the emergence of new AI techniques, such as neural networks, helped to revive interest in AI and paved the way for a later resurgence of the field, which we will discuss in the following sections.

This period is seen as a reminder of the importance of realistic expectations and the need for steady progress to sustain long-term funding and interest in a field.

⭐ The 80's: Introduction of Backpropagation

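The backpropagation algorithm was described by Paul Werbos in his 1974 PhD thesis, "Beyond Regression: New Tools for Prediction and Analysis in the Behavioral Sciences", and was popularized in 1986 by David Rumelhart, Geoffrey Hinton and Ronald Williams in the paper "Learning representations by back-propagating errors". This algorithm addressed many of the limitations faced by perceptrons, most importantly by making it possible to train networks with hidden layers.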

🖼 Working of the Backpropagation Algorithm

Backpropagation is a widely used algorithm for training artificial neural networks, particularly in supervised learning tasks. It allows the network to adjust its weights and biases based on the error between the network's output and the desired output. This allows the network to learn from the data and improve its accuracy over time.

The Backpropagation algorithm is based on the gradient descent method, which is used to minimize the error between the network's output and the desired output. The algorithm uses the chain rule of calculus to calculate the gradient of the error with respect to the network's weights and biases, and then updates the weights and biases in the direction that reduces the error.
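As a rough illustration of the chain rule at work, here is a minimal sketch of backpropagation on a tiny network with one sigmoid hidden unit and one linear output. The network shape, the squared-error loss, the starting parameters and the learning rate are all assumptions chosen to keep the example small; real networks apply the same steps to matrices of weights.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    # One hidden sigmoid unit followed by a linear output unit.
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = w2 * h + b2
    return h, y


def loss(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backprop(params, x, target):
    # Apply the chain rule from the output back towards the input.
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - target            # dLoss/dy
    dw2 = dy * h               # dLoss/dw2
    db2 = dy                   # dLoss/db2
    dh = dy * w2               # error passed back to the hidden unit
    dz = dh * h * (1.0 - h)    # through the sigmoid: sigmoid'(z) = h * (1 - h)
    dw1 = dz * x               # dLoss/dw1
    db1 = dz                   # dLoss/db1
    return [dw1, db1, dw2, db2]


def numerical_grad(params, x, target, eps=1e-6):
    # Finite-difference estimate, used only to sanity-check backprop.
    grads = []
    for i in range(len(params)):
        up = list(params)
        down = list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, target) - loss(down, x, target)) / (2 * eps))
    return grads


def train(params, x, target, lr=0.1, steps=100):
    # Plain gradient descent: step against the gradient to reduce the error.
    params = list(params)
    for _ in range(steps):
        grads = backprop(params, x, target)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params


start = [0.5, 0.0, -0.3, 0.1]  # hypothetical starting weights and biases
trained = train(start, x=1.0, target=0.5)
print("loss before:", loss(start, 1.0, 0.5))
print("loss after: ", loss(trained, 1.0, 0.5))
```

The `numerical_grad` helper is not part of backpropagation itself. It is a common way to check that the hand-derived gradients are correct.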

The Backpropagation algorithm is widely used in a variety of neural network architectures, such as multi-layer perceptrons and convolutional neural networks, and has been applied to many different applications, such as image and speech recognition, natural language processing, and control systems.

Backpropagation has been a major breakthrough in the field of artificial intelligence and machine learning, and its publication marked the start of a new era of research in artificial neural networks and deep learning. But there were still some issues with this algorithm that no one saw coming.

❄ The 90's: The Second AI Winter

The second "AI Winter" occurred in the late 1980s and early 1990s, and it was characterized by a decline in funding and support for artificial intelligence (AI) research, as well as a decrease in the number of AI-related publications and conferences.

One of the main reasons for this second AI Winter was the revelation of errors and limitations in neural network research, particularly regarding initialization. Researchers discovered that the initialization of weights in neural networks could greatly affect the performance of the network and that poor initialization could lead to poor results.

Additionally, researchers found that the neural networks at that time were overfitting the training data and not generalizing well to new data. This led to a lack of trust in neural networks and a decline in funding for AI research.

During this time, many people lost hope that this technology would advance in the coming years. But everything changed when Geoffrey Hinton published his findings on deep learning.

🧠 2006: Deep Belief Nets

The rise of deep learning in 2006 is often attributed to a breakthrough paper published by Geoffrey Hinton, Simon Osindero and Yee-Whye Teh, entitled "A fast learning algorithm for deep belief nets". This paper introduced "deep belief networks" (DBNs), a new approach to training deep neural networks that uses unsupervised pre-training followed by fine-tuning with labelled data.

🖼 Working of the Deep Belief Networks

Before this breakthrough, training deep neural networks was difficult because of the "vanishing gradients" problem: the error signals used to update the weights would become very small as they propagated back through the layers of the network, so the early layers barely learned. The DBN approach introduced in this paper used unsupervised pre-training to work around this issue and allowed deep neural networks to be trained effectively.
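To get a feel for why gradients vanish, here is a toy sketch that pushes a single value through a chain of sigmoid units and tracks how much gradient survives the trip back. This is not the DBN method from the paper. The function name, the weight of 1.0 and the input value are assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gradient_through_layers(depth, weight=1.0, x=0.5):
    # Derivative of the final activation with respect to the input,
    # for a chain of `depth` scalar sigmoid layers sharing one weight.
    a = x
    grad = 1.0
    for _ in range(depth):
        a = sigmoid(weight * a)
        # Chain rule: each layer multiplies the gradient by
        # weight * sigmoid'(z), and sigmoid'(z) = a * (1 - a) <= 0.25.
        grad *= weight * a * (1.0 - a)
    return grad


for depth in (1, 2, 5, 10, 20):
    print(depth, "layers -> gradient", gradient_through_layers(depth))
```

Because each sigmoid layer scales the gradient by at most 0.25 here, the signal reaching the first layer shrinks roughly exponentially with depth.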

This approach led to significant improvements in the performance of neural networks on a variety of tasks, such as image and speech recognition, natural language processing, and drug discovery. The success of this paper inspired a renewed interest in deep learning and led to a resurgence in research in this area.

The rise of deep learning after 2006 also brought renewed attention to architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs). Both had been introduced earlier, but they now became practical at scale, which further improved the performance of deep neural networks and expanded their capabilities.

🏆 2012: First Success for AI

In 2012, a competition called the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) made deep learning famous. The competition, organized by the ImageNet project, aimed to improve the state-of-the-art in object recognition by challenging teams to develop algorithms that could accurately classify images from a large dataset of over 1 million images.

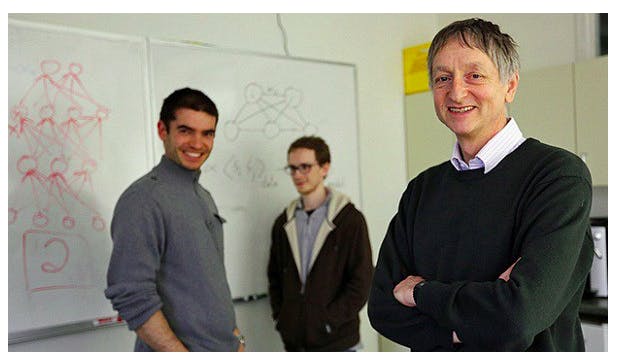

🖼 Krizhevsky, Sutskever and Hinton

One team, made up of Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, used a deep convolutional neural network (CNN) to achieve a significant improvement in the accuracy of image recognition. The team's CNN, called AlexNet, outperformed the previous state-of-the-art by a large margin, achieving a top-5 error rate of 15.3% compared to the second-best result of 26.2%.

If you want to learn more about Alex Krizhevsky's AlexNet Architecture you can check out my blog on a similar topic, just follow the link - AlexNet Architecture Explained

This achievement attracted a lot of attention in the field of artificial intelligence and machine learning, and deep learning began to gain widespread recognition as a powerful tool for image recognition and other tasks. The success of AlexNet and the ILSVRC competition sparked a renewed interest in deep learning and led to a surge in research in this area.

👁 2017: Attention is all you need

Many of the AI products and tools being developed today exist because of this one architecture. It changed the way AI tools are built.

The rise of transformers in deep learning can be traced back to the publication of the paper "Attention Is All You Need" in 2017 by Google researchers Vaswani et al. This paper introduced the transformer, a neural network architecture that uses self-attention mechanisms to process sequential data such as natural language.

🖼 Working of a Transformer (Text Translation Domain)

The transformer architecture uses a mechanism called self-attention, which lets every position in the input sequence weigh how relevant every other position is while processing it. This allows the network to learn long-range dependencies, which are important in tasks such as natural language understanding and machine translation.
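The core of self-attention can be sketched in a few lines. The example below is a simplified, single-head version of scaled dot-product attention in plain Python. It leaves out the learned query, key and value projections that a real transformer uses, and the toy `tokens` vectors are made up for illustration.

```python
import math


def softmax(row):
    # Subtract the max for numerical stability before exponentiating.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    d = len(keys[0])
    weights = []
    for q in queries:
        # Score each key against this query, scaled by sqrt(dimension).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys
        ]
        # Each row of weights says how much this position attends to the others.
        weights.append(softmax(scores))
    # Each output is a weighted average of the value vectors.
    outputs = [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights


# Three toy "token" vectors; here queries, keys and values are the same vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1, so every output vector is a blend of all the tokens, weighted by how similar they are to the token doing the attending.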

The transformer architecture was also able to achieve state-of-the-art results on several natural language processing tasks, such as machine translation, language modelling, and question answering. This was a significant breakthrough in the field of natural language processing and deep learning.

Since then, transformers have been widely adopted in various natural language processing tasks and have been used in many state-of-the-art models such as BERT, GPT-2, and T5. The transformer architecture has also been applied to other fields such as computer vision and speech recognition with great success.

🤖 2022: ChatGPT and Stable Diffusion

The year 2022 was a massive success for artificial intelligence technology. Releases such as Galactica, ChatGPT and Stable Diffusion started trending and began to be integrated into various platforms.

🖼 Image generated from Stable Diffusion Model.

Here are a few notable advancements in artificial intelligence (AI) in 2022:

Generative models: Generative models such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs) and diffusion models (the family Stable Diffusion belongs to) have made significant progress in creating realistic images, videos, and audio. These models can be used for tasks such as image and video synthesis, image and video editing, and style transfer.

Explainable AI: There have been advances in Explainable AI (XAI) techniques, which provide insights into the decision-making process of AI models. This allows for more transparency and accountability in the decision-making process of AI systems, which is particularly important in sensitive areas such as finance and healthcare.

Robotics: Robotics has made significant strides in 2022, with the development of more advanced robots that can perform tasks such as manipulation and grasping. These robots are being used in fields such as manufacturing, agriculture, and healthcare.

Natural Language Processing: The field of natural language processing has continued to advance with the development of more advanced models such as transformer-based models, which can perform tasks such as language translation, question answering, and text summarization.

Reinforcement Learning: Reinforcement Learning (RL) has been making significant progress in 2022, with the development of more advanced algorithms and architectures that can handle complex and dynamic environments. This has led to the development of RL-based systems that can perform tasks such as game-playing and robotics.

🚀 The Future

The future of artificial intelligence (AI) is likely to bring about a wide range of technological advancements, with the potential to revolutionize many industries and aspects of everyday life. Some of the key areas of focus for AI in the future include:

General AI: Developing AI systems that can perform a wide range of tasks, similar to human intelligence. This will require significant advancements in areas such as natural language understanding, problem-solving, and decision-making.

Human-AI collaboration: Developing AI systems that can work alongside humans, augmenting human capabilities and increasing efficiency in various tasks. This will require advancements in areas such as natural language interaction, perception, and decision-making.

AI-based decision-making systems: AI systems will be used to assist with decision-making in various fields such as finance, healthcare, and transportation. This will require advancements in areas such as machine learning, natural language processing, and computer vision.

Robotics: Advances in AI will lead to the development of more advanced robots, which will be used in a wide range of applications such as manufacturing, agriculture, and healthcare.

Edge AI: With the increase of IoT devices and the need for real-time data analysis, AI will be deployed at the edge of the network, where data is generated. This will require advancements in areas such as low-power AI and edge computing.

Quantum AI: AI may be able to use the power of quantum computing to speed up the training process, potentially making it more efficient.

Overall, the future of AI is expected to bring about significant advancements in technology, with the potential to greatly improve the efficiency and effectiveness of many industries and aspects of everyday life.